Today’s companies often live or die by their network performance. Facing pressure from customers and SLA uptime demands from their clients, organizations are constantly looking for ways to improve network efficiency and deliver better, faster, and more reliable services.

That’s why edge computing architecture has emerged as an exciting new topic in the world of network infrastructure in recent years. While the concept isn’t necessarily new, developments in Internet of Things (IoT) devices and data center technology have made it a viable solution for the first time.

Edge computing relocates key data processing functions from the center of a network to the edge, closer to where data is gathered and delivered to end-users. While there are many reasons why this architecture makes sense for certain industries, the most obvious advantage of edge computing is its ability to combat latency.

Effectively troubleshooting high latency can often mean the difference between losing customers and providing high-speed, responsive services that meet their needs.

What is Latency in Networking?

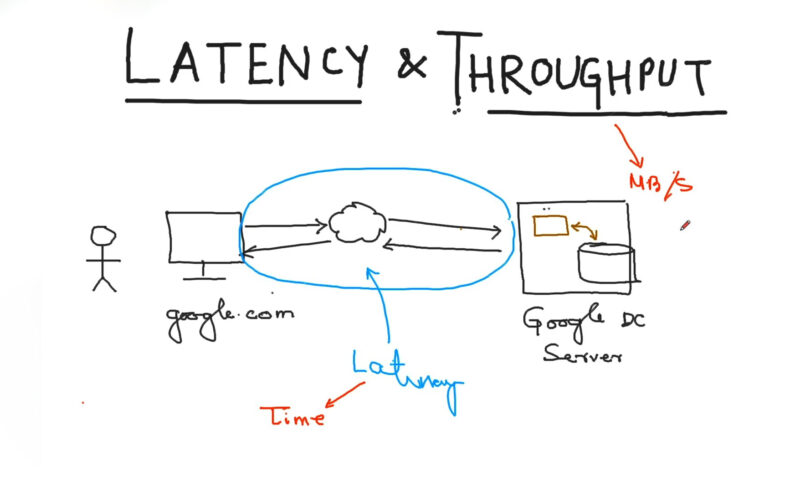

No discussion of network latency would be complete without a brief overview of the difference between latency and bandwidth. Although the two terms are often used interchangeably, they refer to very different things.

Bandwidth Definition

Bandwidth measures the maximum amount of data that can travel over a connection in a given amount of time, usually expressed in bits per second. The greater the bandwidth, the more data that can be delivered.

Generally speaking, increased bandwidth contributes to better network speed because more data can travel across connections, but network performance is still constrained by throughput: the amount of data actually delivered in a given time, which depends on how much data each point in the network can process. Increasing bandwidth to a low-throughput server, then, won’t improve performance because the data will simply bottleneck as the server tries to process it.

However, adding more servers to reduce congestion will allow a network to accommodate higher bandwidth.
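To make the bottleneck idea concrete, here is a minimal Python sketch. It assumes an idealized model in which load is split perfectly evenly across servers, so delivered throughput is simply the smaller of the link bandwidth and the combined processing capacity. The function name and the numbers are hypothetical.

```python
def effective_throughput_mbps(link_bandwidth_mbps: float,
                              per_server_capacity_mbps: float,
                              num_servers: int) -> float:
    """Return the rate at which data can actually be delivered.

    Idealized model: load is spread perfectly evenly across servers, so
    aggregate processing capacity is per-server capacity times server count.
    Delivered throughput can never exceed the slower of the two stages.
    """
    if num_servers < 1:
        raise ValueError("need at least one server")
    processing_capacity = per_server_capacity_mbps * num_servers
    return min(link_bandwidth_mbps, processing_capacity)


# One server that can process 400 Mbps sits behind a 1 Gbps link.
print(effective_throughput_mbps(1_000, 400, 1))   # 400: the server is the bottleneck
# Upgrading the link to 10 Gbps changes nothing on its own.
print(effective_throughput_mbps(10_000, 400, 1))  # 400
# Adding servers lets the network use more of the available bandwidth.
print(effective_throughput_mbps(10_000, 400, 4))  # 1600
```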

Latency Definition

Latency, on the other hand, is a measurement of how long it takes for a data packet to travel from its origin point to its destination. While the type of connection is a key consideration (more on that below), distance remains one of the key factors in determining latency.

That’s because data is still constrained by the laws of physics and cannot exceed the speed of light (although some connections have approached it). No matter how fast a connection may be, the data must still physically travel that distance, which takes time.

Unlike the case with bandwidth, then, adding more servers to reduce congestion will not directly decrease latency. Good network design is the best way to improve performance between servers separated by long distances.

The type of connection between these points is important since data is transmitted faster over fiber optic cables than copper cabling, but distance and network complexity play a much larger role. Networks don’t always route data along the same pathway because routers and switches continually prioritize and evaluate where to send the data packets they receive.

The shortest route between two points might not always be available, forcing data packets to travel a longer distance through additional connections, all of which increase latency in a network.
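The effect of an unavailable shortest route can be sketched with Dijkstra’s shortest-path algorithm. This is an illustrative model, not how any particular router works: the topology and per-link latencies below are hypothetical, and while link-state protocols such as OSPF do run a shortest-path computation, their link costs are usually configured values rather than measured latencies.

```python
import heapq


def lowest_latency_path(links, source, destination, down=frozenset()):
    """Dijkstra's algorithm over one-way link latencies in milliseconds.

    links: dict mapping (node_a, node_b) -> latency_ms, treated as bidirectional.
    down:  set of (node_a, node_b) links that are currently unavailable.
    Returns (total_latency_ms, path), or (inf, []) if unreachable.
    """
    graph = {}
    for (a, b), ms in links.items():
        if (a, b) in down or (b, a) in down:
            continue  # skip links that are out of service
        graph.setdefault(a, []).append((b, ms))
        graph.setdefault(b, []).append((a, ms))

    queue = [(0.0, source, [source])]
    visited = set()
    while queue:
        total, node, path = heapq.heappop(queue)  # cheapest unexplored route
        if node == destination:
            return total, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, ms in graph.get(node, []):
            if neighbor not in visited:
                heapq.heappush(queue, (total + ms, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical routers A-E with one-way link latencies in milliseconds.
links = {("A", "B"): 5, ("B", "D"): 5, ("A", "C"): 8, ("C", "E"): 6, ("E", "D"): 7}
print(lowest_latency_path(links, "A", "D"))                      # (10.0, ['A', 'B', 'D'])
print(lowest_latency_path(links, "A", "D", down={("B", "D")}))   # (21.0, ['A', 'C', 'E', 'D'])
```

When the direct B-D link is unavailable, the packet still arrives, but it crosses an extra router and the path latency roughly doubles.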

Network Latency Test

How much delay is latency actually adding? There are a few easy ways to conduct a network latency test and determine how much of an impact latency is having on performance.

Microsoft Windows, macOS, and Linux all include a “traceroute” utility (called “tracert” on Windows). It lists each router, or “hop,” along the path to a destination and how long each one takes to respond to a probe, measured in milliseconds.

Because each hop’s response time is measured from the original request, the time reported for the final hop (the destination itself) provides a good estimate of round-trip latency. Executing a traceroute command not only shows how long it takes data to travel from one IP address to another, but also reveals how complex networking can be.
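The sketch below shows one way to read traceroute results, using made-up numbers rather than real output. Because each hop’s time is measured from the source, the destination’s response time is already the end-to-end round-trip estimate, and comparing consecutive hops shows where along the path the delay is added. The data layout and function names are assumptions for illustration.

```python
from statistics import median

# Hypothetical traceroute results: one list of probe round-trip times (ms)
# per hop, in path order. Each hop's RTT is measured from the source, so it
# already includes the time spent reaching all of the earlier hops.
hops_ms = [
    [1.2, 1.1, 1.3],     # home router
    [9.8, 10.4, 9.9],    # ISP edge
    [11.0, 10.7, 11.2],  # ISP core
    [48.5, 47.9, 49.1],  # long-haul link
    [50.2, 50.6, 49.8],  # destination
]


def round_trip_estimate(hops):
    """The destination's median RTT is the end-to-end latency estimate."""
    return median(hops[-1])


def per_hop_increase(hops):
    """Median RTT added at each hop relative to the previous hop."""
    medians = [median(probes) for probes in hops]
    return [round(b - a, 1) for a, b in zip([0.0] + medians, medians)]


print(round_trip_estimate(hops_ms))  # 50.2
print(per_hop_increase(hops_ms))     # [1.2, 8.7, 1.1, 37.5, 1.7]
```

In this made-up trace, most of the delay appears on the long-haul hop. Intermediate routers often give low priority to answering probes, so one slow hop followed by faster ones is not necessarily a problem.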

Two otherwise identical requests might have significant differences in system latency due to the path the data took to reach its destination. This is a byproduct of the way routers prioritize and direct different types of data.

The shortest route may not always be available, which can cause unexpected latency in a network.

Latency in Gaming

Although many people may only hear about latency when they’re blaming it for their online gaming misfortunes, video games are actually a good way to explain the concept. In the context of a video game, high latency means that it takes longer for a player’s controller input to reach a multiplayer server.

High-latency connections result in significant lag: a delay between a player’s controller inputs and the on-screen response. To a player with a low-latency connection, opponents on high-latency connections seem to react slowly to events or even stand still.

From the high-latency player’s perspective, other players appear to teleport all over the screen because that player’s own connection can’t send and receive data quickly enough to keep up with the game information coming from the server. Gamers often refer to their “ping” when discussing latency.

A ping test is similar to a “traceroute” command, but instead of reporting every hop along the path, it measures only the round-trip time to the destination system: how long a packet takes to get there and for the reply to come back (like a sonar “ping” returning to the source after bouncing off an object).
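A true ping sends ICMP echo requests, which usually requires raw-socket privileges. As an unprivileged approximation, the Python sketch below times a TCP connection handshake instead. The function name is hypothetical, and the target must be a host and port that accept TCP connections.

```python
import socket
import time


def tcp_ping_ms(host: str, port: int, attempts: int = 3, timeout: float = 2.0) -> list:
    """Time TCP connection setup as a rough stand-in for an ICMP ping.

    connect() returns once the SYN / SYN-ACK exchange completes, so each
    sample approximates one network round trip plus a little OS overhead.
    The name is resolved once up front so DNS lookup time is not counted.
    """
    family, socktype, proto, _, sockaddr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    samples = []
    for _ in range(attempts):
        with socket.socket(family, socktype, proto) as sock:
            sock.settimeout(timeout)
            start = time.perf_counter()
            sock.connect(sockaddr)  # blocks until the handshake completes
            samples.append((time.perf_counter() - start) * 1000)
    return samples
```

For example, calling tcp_ping_ms with a web server’s hostname and port 443 returns a few round-trip samples in milliseconds. Taking the minimum or median of several samples smooths out one-off delays.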

A low ping means there is very little latency in the connection. It’s no surprise, then, that advice about how gamers can reduce their ping involves things like removing impediments that could slow down data packets, such as firewalls (not recommended), or physically moving their computer closer to their home’s router (probably negligible, but every little bit could help in a ranked Overwatch match).

Latency in Streaming Services

The same latency that bedevils gamers is responsible for sputtering, fragmented streaming content. These buffering delays already occur in 29 percent of streaming experiences.

Since video content is expected to make up 82 percent of global internet traffic by 2022 (with live internet video alone forecast to grow 15-fold from 2017), high latency is a problem that could very well become even more common in the near future. Studies have shown that internet users begin abandoning videos that buffer or are slow to load after merely two seconds of delay.

Companies that provide streaming services need to solve this problem if they expect to stay competitive as they pursue digital transformation.

Latency in Cloud Computing

Many organizations encounter latency when utilizing cloud computing services. Distance from servers is usually the primary culprit, but since the average business uses about five different cloud platforms, inter-cloud latency can pose significant problems for a poorly implemented multi-cloud deployment.

Although larger cloud platforms that offer multiple services might appear immune to this problem, they can often suffer from intra-cloud latency when the virtualized architecture is not well-designed. High latency in cloud computing can have a tremendous impact on organizations.

A landmark report by Amazon in 2009 found that every 100 ms of latency cost the online retail giant one percent in sales, while a 2018 Google study found that as page load time increased from one second to three seconds, the probability of a visitor navigating away from the page increased by 32 percent. Given those grim figures, it’s hardly a surprise that organizations spend so much time thinking about how to reduce network latency.

How to Improve Latency

Latency is certainly easy to notice given that too much of it can cause slow loading times, jittery video or audio, or timed-out requests. Fixing the problem, however, can be a bit more complicated since the causes are often located downstream from a company’s infrastructure.

In most cases, high latency is a byproduct of distance. Although fast connections may make networks seem to work instantaneously, data is still constrained by the laws of physics.

It can’t move faster than the speed of light, and in standard optical fiber it travels at only about two-thirds of that speed. Under the very best conditions, it takes data about 21 milliseconds to travel from New York to San Francisco.

This number is misleading, however. Various bottlenecks due to bandwidth limitations and rerouting near the data endpoints (the “last mile” problem) can add between 10 and 65 milliseconds of latency.
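The 21-millisecond figure follows from simple arithmetic. This sketch assumes a great-circle New York to San Francisco distance of about 4,130 km and a propagation speed of two-thirds the speed of light. Real fiber routes are longer than the straight-line distance, and a round trip takes at least twice as long as the one-way delay.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBER_VELOCITY_FACTOR = 2 / 3  # light in fiber travels at roughly two-thirds of c


def propagation_delay_ms(distance_km: float,
                         velocity_factor: float = FIBER_VELOCITY_FACTOR) -> float:
    """One-way time for a signal to cover distance_km, ignoring all other delays."""
    return distance_km / (SPEED_OF_LIGHT_KM_PER_S * velocity_factor) * 1000


# Assumed straight-line (great-circle) New York to San Francisco distance.
NY_SF_KM = 4_130

best_case = propagation_delay_ms(NY_SF_KM)
# Last-mile bottlenecks and rerouting add roughly 10 to 65 ms (figures from the text).
low, high = best_case + 10, best_case + 65
print(f"best case: {best_case:.1f} ms")                      # best case: 20.7 ms
print(f"with last-mile overhead: {low:.0f}-{high:.0f} ms")   # 31-86 ms
```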

Reducing the physical distance between the data source and its eventual destination is the most effective way to fix latency. For markets and industries that rely on the fastest possible access to information, such as IoT applications or financial services, that difference can save companies millions of dollars.

Latency arbitrage, a notorious practice in high-frequency financial investment markets that involves exploiting “lags” in financial systems, has been shown to cost investors as much as $5 billion annually. Speed, then, can provide a significant competitive advantage for organizations willing to commit to it.

How to Reduce Latency With Edge Computing

Edge computing architecture offers a groundbreaking solution to the problem of high latency. By locating key processing tasks closer to end users, edge computing can deliver faster and more responsive services.

IoT devices provide one way of pushing these tasks to the edge of a network. Advancements in processor and storage technology have made it easier than ever to increase the power of internet-enabled devices, allowing them to process much of the data they gather locally rather than transmitting it back to centralized cloud computing servers for analysis.

By resolving more processes closer to the source and relaying far less data back to the center of the network, IoT devices can greatly reduce response times. This will be critically important for technology like autonomous vehicles, where a few milliseconds of lag could be the difference between a safe journey to a family gathering and a fatal accident.
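As a rough illustration of processing at the edge, the sketch below has a hypothetical sensor device reduce a window of readings to a compact summary, forwarding individual values only when they fall outside an assumed normal range. The thresholds, field names, and data are invented for the example.

```python
import json
from statistics import mean


def summarize_window(readings, low, high):
    """Process one window of sensor readings on the device itself.

    Rather than uploading every reading, return one compact summary plus
    any readings outside [low, high] that the central system should see.
    """
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        "alerts": [r for r in readings if r < low or r > high],
    }


# Hypothetical temperature readings (degrees C) from one collection window,
# including a single out-of-range spike.
window = [21.0 + (i % 5) * 0.1 for i in range(600)] + [35.2]
summary = summarize_window(window, low=15.0, high=30.0)

raw_bytes = len(json.dumps(window).encode())
summary_bytes = len(json.dumps(summary).encode())
print(f"raw upload: {raw_bytes} bytes, summary upload: {summary_bytes} bytes")
print(summary["alerts"])  # [35.2]
```

The device can also act on an alert immediately, without waiting for a round trip to a distant cloud server.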

Of course, not every digital transformation initiative will rely on IoT devices. Video streaming services, for example, need a different kind of solution.

Edge data centers (smaller, purpose-built facilities located in key emerging markets) make it easier to deliver streaming video and audio by caching high-demand content much closer to end users. This not only ensures that popular content is delivered faster, but also frees up bandwidth to deliver content from more distant locations.
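Edge caching can be sketched as a least-recently-used (LRU) cache that sits in front of a distant origin. LRU is an assumption here, since production content delivery networks use a variety of eviction policies, and the class and origin function below are hypothetical.

```python
from collections import OrderedDict


class EdgeCache:
    """A minimal least-recently-used (LRU) cache for an edge site.

    Popular titles stay close to viewers; a miss falls back to the distant
    origin (fetch_from_origin), and the least recently requested title is
    evicted once the cache is full.
    """

    def __init__(self, capacity, fetch_from_origin):
        self.capacity = capacity
        self.fetch_from_origin = fetch_from_origin
        self.items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self.items:
            self.items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.items[key]
        self.misses += 1
        value = self.fetch_from_origin(key)  # slow, long-distance trip
        self.items[key] = value
        if len(self.items) > self.capacity:
            self.items.popitem(last=False)  # evict the least recently used title
        return value


# Hypothetical origin lookup standing in for a distant hyperscale facility.
cache = EdgeCache(capacity=2, fetch_from_origin=lambda title: f"<video {title}>")
for title in ["show-a", "show-b", "show-a", "show-c", "show-a", "show-b"]:
    cache.get(title)
print(cache.hits, cache.misses)  # 2 4
```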

For instance, if the top ten Netflix shows are streaming from a hyperscale facility in New York City, but that same content is also cached in an edge facility outside of Pittsburgh, end users in both markets will be able to stream more efficiently because the content sources are distributed closer to consumers. Online game providers can also reduce latency for their players by placing servers in edge data centers closer to where gamers are located.

If players in a particular region are logged into servers that can be reached with minimal latency, they will have a much more enjoyable experience than if they were constantly struggling with the high ping times that result from using servers on the other side of the country.
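Directing each user to the nearest site can be approximated with great-circle distance, as in the sketch below. Distance is only a proxy for latency, since actual network routes may be longer. The site names are hypothetical and the coordinates are approximate city locations; real services commonly steer users with techniques such as DNS-based routing or anycast.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * asin(sqrt(a))


def nearest_site(user, sites):
    """Return (name, distance_km) for the site closest to the user."""
    name = min(sites, key=lambda n: haversine_km(*user, *sites[n]))
    return name, haversine_km(*user, *sites[name])


# Approximate city coordinates; the site list itself is hypothetical.
sites = {
    "new-york-hyperscale": (40.71, -74.01),
    "pittsburgh-edge": (40.44, -79.99),
}
user_in_cleveland = (41.50, -81.69)
print(nearest_site(user_in_cleveland, sites))
```

For a viewer in Cleveland, the Pittsburgh site is roughly 185 km away, compared with roughly 650 km to New York.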

Additional Tips and Tools for Troubleshooting

While simply reducing the distance data has to travel is often the best way of improving network performance, there are a few additional strategies that can substantially reduce network latency.

Multiprotocol Label Switching (MPLS)

Effective router optimization can also help to reduce latency. Multiprotocol label switching (MPLS) improves network speed by tagging data packets and quickly routing them to their next destination.

This allows the next router to simply read the label rather than performing a full routing-table lookup on the packet’s destination address to determine where it needs to go next. While not applicable to every network, MPLS can greatly reduce latency by streamlining the router’s tasks.
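The difference between the two lookups can be sketched in Python. Conventional IP forwarding picks the most specific (longest) matching prefix from the routing table, while MPLS forwarding is an exact-match lookup on the incoming label followed by a label swap. The tables, labels, and router names below are hypothetical, and real routers perform these lookups in specialized hardware rather than with linear scans.

```python
import ipaddress

# Conventional IP forwarding: longest-prefix match against the routing table.
ROUTES = {
    ipaddress.ip_network("10.0.0.0/8"): "router-b",
    ipaddress.ip_network("10.1.0.0/16"): "router-c",
    ipaddress.ip_network("0.0.0.0/0"): "router-default",
}


def ip_next_hop(destination):
    addr = ipaddress.ip_address(destination)
    matches = [net for net in ROUTES if addr in net]
    best = max(matches, key=lambda net: net.prefixlen)  # most specific route wins
    return ROUTES[best]


# MPLS forwarding: an exact-match lookup on the incoming label, which is
# swapped for an outgoing label before the packet is sent on.
LABEL_TABLE = {
    100: (200, "router-c"),
    101: (201, "router-b"),
}


def mpls_forward(in_label):
    out_label, next_hop = LABEL_TABLE[in_label]
    return out_label, next_hop


print(ip_next_hop("10.1.2.3"))  # router-c (the /16 is more specific than the /8)
print(mpls_forward(100))        # (200, 'router-c')
```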

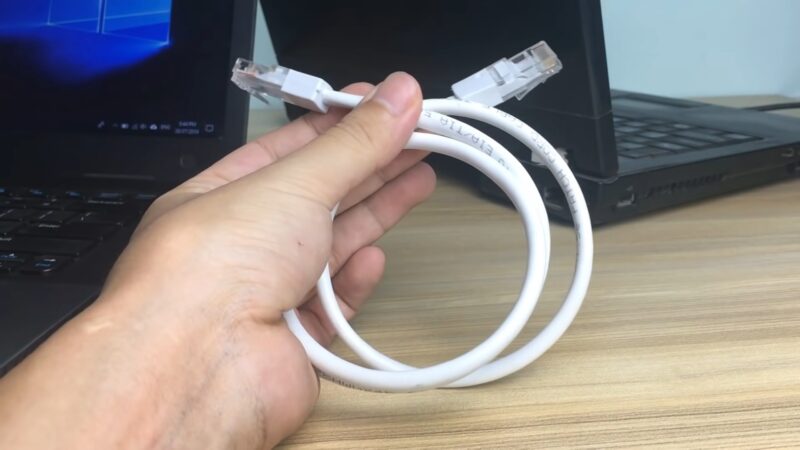

Cross-Connect Cabling

In a carrier-neutral colocation data center, colocation customers often need to connect their hybrid and multi-cloud networks to a variety of cloud service providers. Under normal circumstances, they connect to these services through an ISP, which forces them to use the public internet to make a connection.

Colocation facilities offer cross-connect cabling, however, which is simply a dedicated cable run from a customer’s server to a cloud provider’s server. With the distance between the servers often measured in mere feet, latency is greatly reduced, enabling much faster response times and better overall network performance.

Direct Interconnect Cabling

When cross-connect cabling in a colocation environment isn’t possible, there are other ways to streamline connections to reduce latency. Direct connections to cloud providers, such as Microsoft Azure ExpressRoute, may not always resolve the challenges posed by distance, but the point-to-point interconnect cabling means that data will always travel directly from the customer’s server to the cloud server.

Unlike a conventional internet connection, there’s no path routing to consider, which means packets won’t be rerouted along different paths as they travel through the network.

Software Defined Networking

Building a multi-cloud deployment that provides access to a variety of cloud providers can significantly expand an organization’s digital capabilities, but it also exposes the organization to latency risks if that network isn’t well designed. Luckily, software-defined networking (SDN) service providers make it easier to create dynamic, low-latency multi-cloud environments.

Rather than connecting to each provider individually, companies can instead connect to a single SDN provider and then choose from a selection of available cloud providers. In most instances, the SDN provider will have an efficient network architecture in place that provides low-latency data access.

Connecting to an SDN within a colocation environment provides an additional layer of reliable and efficient connectivity infrastructure that will keep latency and bandwidth issues to a minimum.

FAQ

How does edge computing differ from traditional cloud computing?

Edge computing processes data closer to the source of data generation (like IoT devices) rather than sending it to centralized cloud servers. This reduces latency and improves performance.

What is the primary cause of network latency?

The primary cause of network latency is the physical distance that data has to travel. Other factors include the type of connection, network complexity, and the number of routers or switches the data must pass through.

Why is latency a concern in online gaming?

High latency in online gaming can result in lag, where there’s a noticeable delay between a player’s actions and the game’s response. This can affect gameplay and the overall gaming experience.

How does latency affect streaming services?

High latency can cause buffering delays in streaming, leading to a disrupted viewing experience. With the growth of video content online, addressing latency issues is crucial for streaming service providers.

What is MPLS and how does it help in reducing latency?

Multiprotocol Label Switching (MPLS) improves network speed by tagging data packets with labels that routers can read quickly, reducing the time each router spends deciding where to forward them.

How do direct interconnects differ from regular internet connections?

Direct interconnects provide a point-to-point connection between servers, ensuring data travels directly without being rerouted, reducing latency.

What is the role of software-defined networking in latency reduction?

Software-defined networking allows for dynamic, efficient network architectures that can provide low-latency access to multiple cloud providers.

Final Words

In today’s digital age, where every millisecond counts, understanding and addressing network latency is paramount. As industries evolve and the demand for real-time data processing grows, solutions like edge computing become increasingly relevant.

By bringing data processing closer to the source, we not only enhance user experiences across various platforms but also pave the way for innovations that were once deemed impossible due to latency constraints.